Fluid directed Rigid Ball using Deep Reinforcement Learning

Course project for CIS563 (Physics-based simulations) during my first semester at Penn. This is an interdisciplinary project at the intersection of simulation, computer graphics, and machine learning. It is based on a 2018 paper. A detailed report of this project can be found here.

Languages:

- C++

- Python

- Matlab

Libraries:

- Gym by OpenAI

- Keras (backend: Tensorflow)

- Eigen (linear algebra in C++)

- Partio (data I/O)

- Stable-baselines

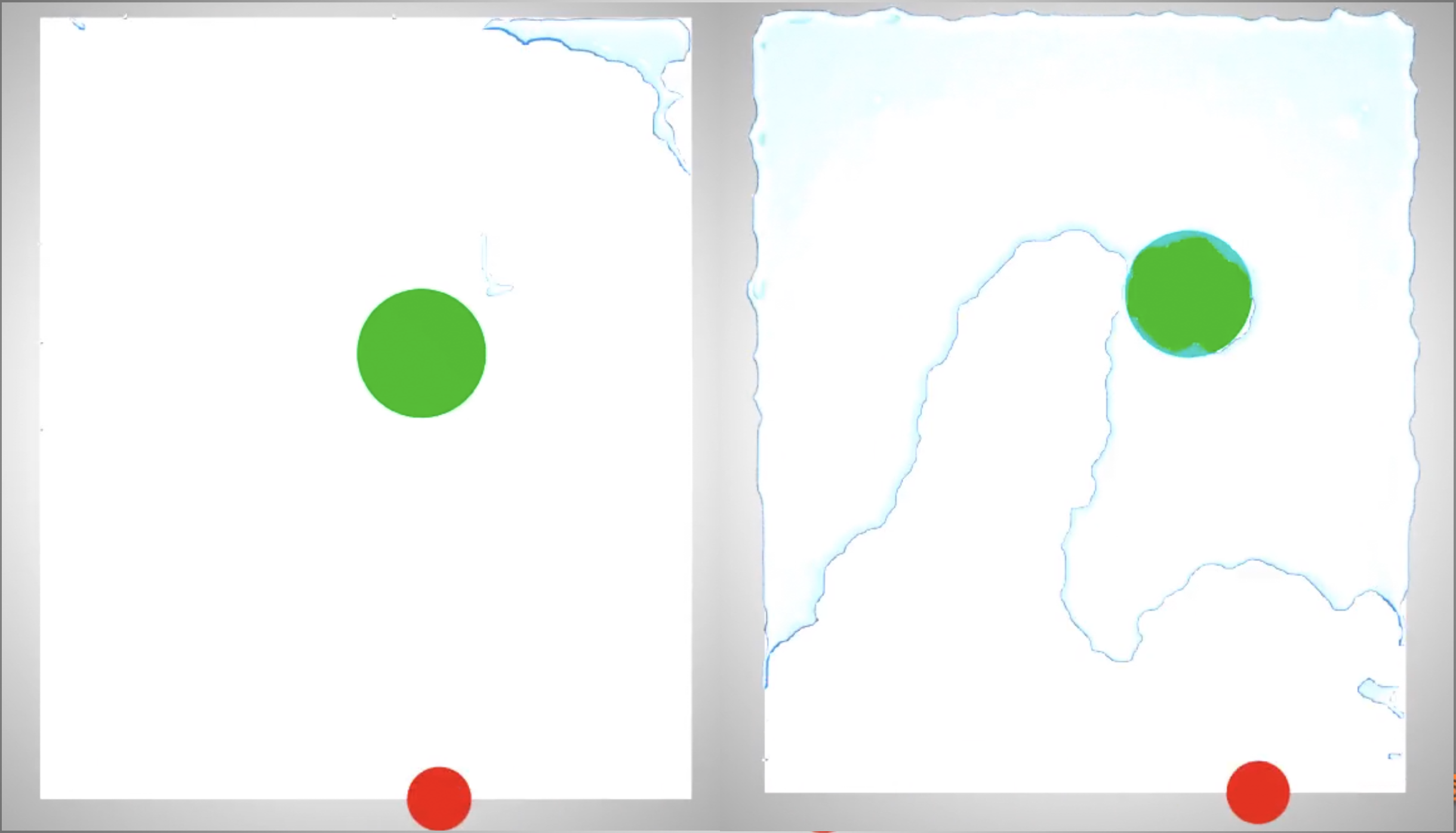

Two different modeling approaches were combined to solve the problem at hand. The task was to balance a rigid ball in the air using a jet of water that is controlled by a neural network. The motion of the ball follows the classical laws of physics (a deterministic approach), whereas the movement of the water jet follows a probabilistic approach: the neural network learns to evaluate the best set of moves for a given state of the environment.

I planned the project in several stages:

- Physics Simulator: The motion of the ball and the fluid is modeled using Newton's laws and the Navier-Stokes equations, which are numerically integrated to simulate the coupled motion. I followed the variational pressure framework described by Prof. Batty in his 2007 paper. I wrote this code in C++11, using the Eigen library for linear algebra operations.

- C++ to Python: As most machine learning libraries are Python-based, I had to make the C++ simulator usable from Python. For this I used an interface compiler called SWIG (Simplified Wrapper and Interface Generator), which allowed me to package the entire C++ code into a single shared library file that can be imported directly in Python. This gave me a convenient way to simulate the fluid-ball motion from Python while keeping the computations fast, since the backend is still C++.

- Gamify the Simulator: As I was trying to solve a control problem using Reinforcement Learning, I turned to a popular Reinforcement Learning library: Gym by OpenAI. Gym provides a clean and modular Python interface for reinforcement learning, with methods for resetting the simulation, advancing it by one time step, and so on. I wrapped the SWIG-generated simulator library with Gym to create what Gym calls an Environment.

- Pre-training decisions: The original paper used an autoencoder in the learning task to reduce the dimension of the environment's state space (which is high-dimensional because of the fluid field). I tried this approach and designed a convolutional autoencoder using Keras. Although the autoencoder did a pretty good job at dimensionality reduction, I didn’t end up using it because it needed to be trained at every iteration of the learning algorithm, which was not feasible given the time constraints. So I came up with a new approach: a moving window that tracks the position of the ball and extends symmetrically around it.

- Training task: OpenAI has a baselines library that integrates with Gym environments, but I found lots of bugs in it and the project seemed to break often. I used an alternative called stable-baselines, which builds on the baselines library with a cleaner and more stable implementation.
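The Gym wrapping and moving-window steps above can be illustrated with a minimal sketch. This is not the project's code: `ToySimulator` is a hypothetical stand-in for the SWIG-wrapped C++ simulator with made-up toy dynamics, and `crop_window`, `BallJetEnv`, the grid size, the reward, and the action encoding (a jet column index) are all assumptions chosen for illustration. The class only mirrors the classic Gym `reset()` / `step(action)` interface and does not import Gym, so that it runs standalone.

```python
def crop_window(field, center_row, center_col, half_size, fill=0.0):
    """Return a square window of `field` centered on (center_row, center_col).

    The window extends `half_size` cells in every direction, so it is
    symmetric around the center. Cells outside the grid are set to `fill`.
    """
    rows, cols = len(field), len(field[0])
    window = []
    for r in range(center_row - half_size, center_row + half_size + 1):
        row = []
        for c in range(center_col - half_size, center_col + half_size + 1):
            inside = 0 <= r < rows and 0 <= c < cols
            row.append(field[r][c] if inside else fill)
        window.append(row)
    return window


class ToySimulator:
    """Hypothetical placeholder for the SWIG-wrapped simulator (toy dynamics)."""

    def __init__(self, size=16):
        self.size = size
        self.reset()

    def reset(self):
        self.ball = [self.size // 2, self.size // 2]  # (row, col); row 0 is the top
        self.field = [[0.0] * self.size for _ in range(self.size)]

    def advance(self, jet_col):
        # Mark the jet column in an otherwise empty "fluid field".
        self.field = [[0.0] * self.size for _ in range(self.size)]
        for r in range(self.size):
            self.field[r][jet_col] = 1.0
        # Toy rule: the ball rises if the jet is under it, otherwise it falls.
        if jet_col == self.ball[1]:
            self.ball[0] = max(0, self.ball[0] - 1)
        else:
            self.ball[0] = min(self.size - 1, self.ball[0] + 1)


class BallJetEnv:
    """Environment with the classic Gym-style reset()/step() methods."""

    def __init__(self, half_size=2):
        self.sim = ToySimulator()
        self.half_size = half_size
        self.target_row = self.sim.size // 2  # assumed target height

    def _observe(self):
        # The observation is the fluid field cropped around the ball.
        row, col = self.sim.ball
        return crop_window(self.sim.field, row, col, self.half_size)

    def reset(self):
        self.sim.reset()
        return self._observe()

    def step(self, action):
        self.sim.advance(action)
        reward = -abs(self.sim.ball[0] - self.target_row)  # assumed reward
        done = self.sim.ball[0] == self.sim.size - 1       # ball hit the floor
        return self._observe(), reward, done, {}
```

With this sketch, `BallJetEnv().reset()` returns a 5x5 window, and each `step(action)` call returns the `(observation, reward, done, info)` tuple used by the classic Gym interface. The observation size stays fixed regardless of the full grid size, which is the point of the moving window. A real environment would subclass `gym.Env` and declare `observation_space` and `action_space` so that a training library can consume it.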